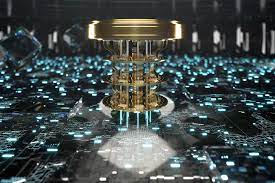

Quantum computing is a new type of computing that uses the principles of quantum physics to process and store information. Traditional computers, like the one you’re using now, use bits to store and manipulate information. A bit is always in exactly one of two states: 0 or 1.

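In contrast, quantum computers use quantum bits, or qubits. A qubit can be in a superposition of 0 and 1: its state is a weighted combination of both, described by two numbers called amplitudes. This is often summarized as a qubit being “both 0 and 1 at the same time”, but measuring a qubit always returns a single 0 or 1, with probabilities determined by those amplitudes. The power comes from scale and interference: describing n qubits takes 2^n amplitudes, and quantum algorithms are designed so that the amplitudes of wrong answers cancel out while those of right answers reinforce each other. This gives quantum computers the potential to solve certain problems much faster than classical computers.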
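To make superposition and measurement concrete, here is a minimal sketch that simulates one qubit on an ordinary computer. It assumes the standard textbook picture: a qubit’s state is a pair of complex amplitudes for 0 and 1, the Hadamard gate turns a plain 0 into an equal superposition, and a measurement returns 0 or 1 with probability equal to the squared size of each amplitude. The function names are illustrative only and do not come from any quantum-computing library.

```python
import math
import random

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for 0 and 1,
# normalised so that |alpha|^2 + |beta|^2 == 1.
ZERO = (1 + 0j, 0 + 0j)  # the qubit starts out as a definite 0

def hadamard(state):
    """Hadamard gate: maps a definite 0 to an equal superposition of 0 and 1."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Chance of reading 0 or 1: the squared magnitude of each amplitude."""
    return [abs(amp) ** 2 for amp in state]

def measure(state, rng=random):
    """Sample one readout; a single measurement only ever yields 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

plus = hadamard(ZERO)
print(probabilities(plus))  # approximately [0.5, 0.5]

# Repeating the experiment shows the 50/50 statistics hidden in the amplitudes.
rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print(counts)  # roughly 500 each; exact numbers depend on the seed

# A general n-qubit state needs 2**n amplitudes, one for every n-bit string,
# whereas an n-bit classical register holds just one of those strings at a time.
for n in (1, 10, 50):
    print(f"{n} qubits -> {2 ** n} amplitudes")
```

Simulating qubits this way costs memory that doubles with every added qubit, which is one intuitive reason real quantum hardware is interesting: it does not have to write those amplitudes down.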

Another important concept in quantum computing is entanglement. When qubits are entangled, their joint state cannot be described by giving each qubit a state of its own: the result of measuring one qubit is correlated with the result of measuring the other, no matter the distance between them. These correlations cannot be used to send messages faster than light, but they let a quantum computer operate on the shared state of many qubits as a whole, which makes it more powerful for certain types of computations.
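Entanglement can be illustrated with the simplest entangled state, often called a Bell state. The sketch below, again a small classical simulation with hypothetical helper names, assumes the usual construction: start two qubits at 00, apply a Hadamard gate to the first, then a CNOT gate that flips the second qubit whenever the first is 1. Each qubit on its own reads 0 or 1 at random, yet the two readouts always agree.

```python
import math
import random
from collections import Counter

# Two-qubit state: four complex amplitudes for 00, 01, 10, 11,
# stored at index 2*q0 + q1 (q0 is the left-hand qubit).

def hadamard_q0(state):
    """Hadamard gate on qubit q0, leaving q1 untouched."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_q0_to_q1(state):
    """CNOT with q0 as control: flip q1 when q0 is 1 (swap the 10 and 11 amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure_both(state, rng):
    """Sample a joint outcome (q0, q1) with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return divmod(index, 2)  # (index // 2, index % 2) == (q0, q1)

start = [1 + 0j, 0j, 0j, 0j]               # both qubits start at 0
bell = cnot_q0_to_q1(hadamard_q0(start))   # (00 + 11) / sqrt(2)

rng = random.Random(1)
outcomes = Counter(measure_both(bell, rng) for _ in range(1000))
print(outcomes)  # only (0, 0) and (1, 1) appear, each roughly half the time
```

The amplitudes for 01 and 10 are exactly zero, so mismatched readouts never occur; no assignment of a separate state to each qubit reproduces this pattern, which is what makes the pair entangled.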

By harnessing these quantum properties, quantum computers could solve some problems that are impractical for classical computers, such as factoring the very large numbers that much of today’s public-key encryption relies on, or simulating molecules and materials in detail. They have the potential to revolutionize fields like cryptography, optimization, drug discovery, and scientific simulation, among others.

It’s worth noting that quantum computing is still an emerging technology. Today’s qubits are fragile and error-prone, and many challenges, such as correcting those errors at scale, must be overcome before we have practical and widely usable quantum computers. However, researchers are making exciting progress in this field, and we may see significant advancements in the coming years.